Find & Share: Deaf Device Concepts

By Erika Goering

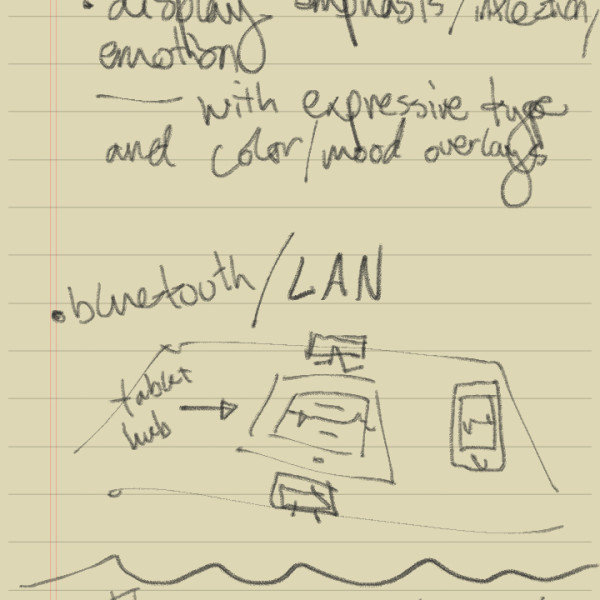

Since I’m in an “anything goes” mindset with this project, I figured it’d be good to share some of the concept devices and peripherals I’ve stumbled across. I will pull ideas from some of these and apply them to my project in a way that fits my thesis question.

Concept phone that translates what the caller is saying:

Enable Talk Sign Language Gloves

Sign/Voice Language Translator (SVLT)

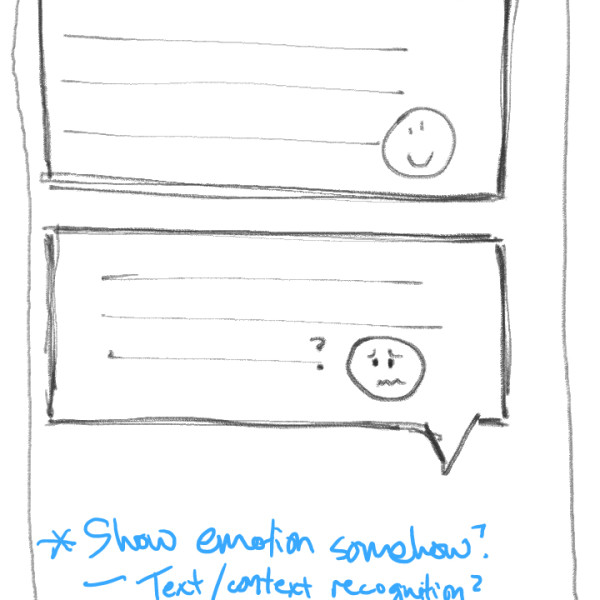

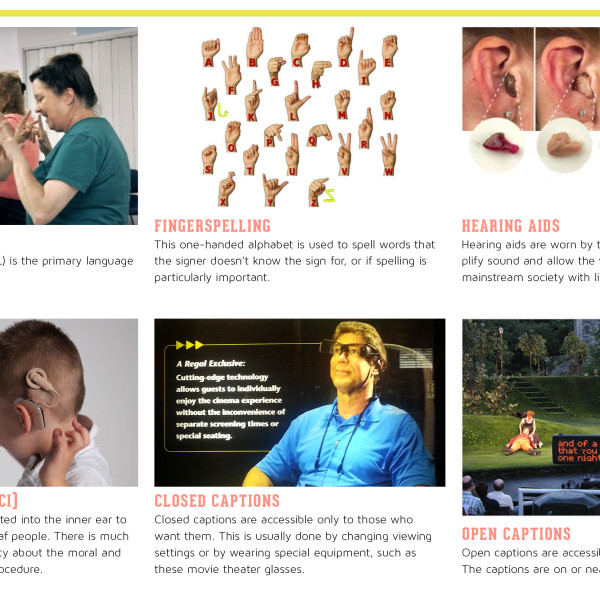

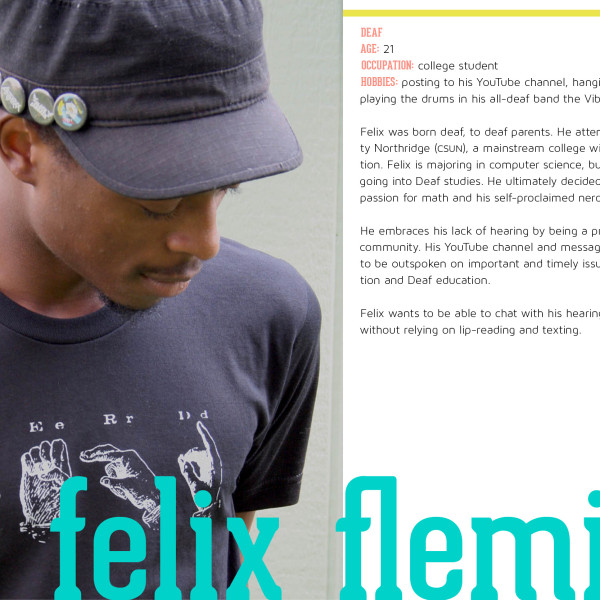

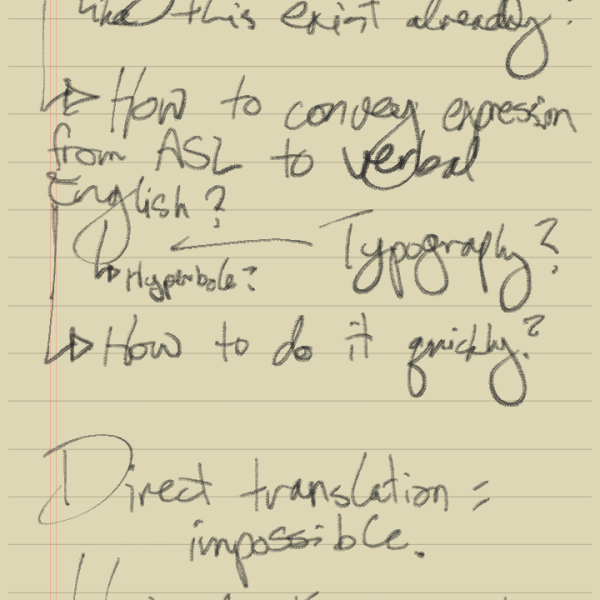

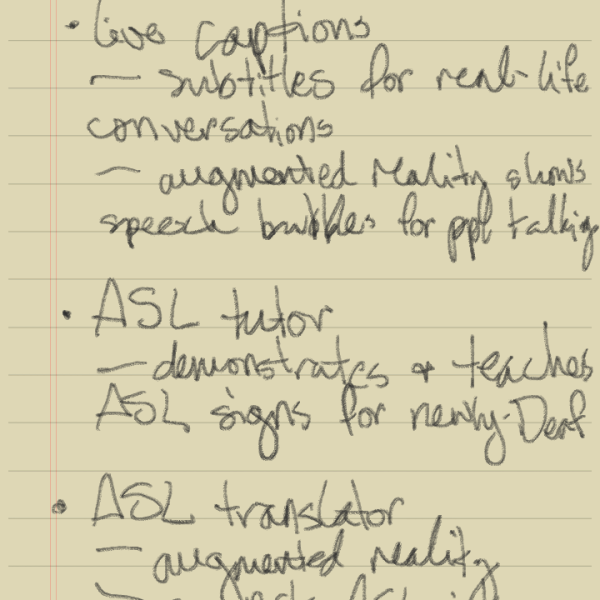

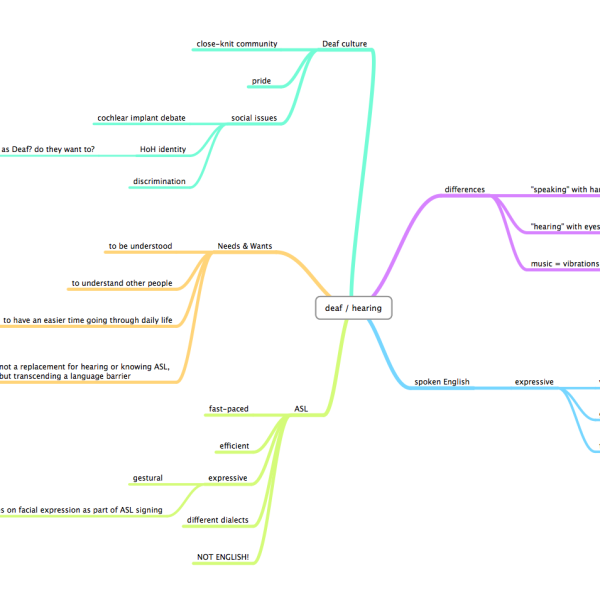

One idea that came up the other day was using gloves or rings to detect ASL and send it to translation software. I really like this idea: “smart” rings (and/or conductive fingernail polish??) that send movement information to a translator. Maybe this, combined with an unobtrusive facial recognition camera (to capture facial expression and emotion), could be a solution. And on the spoken-English-to-Deaf side, there could be a heads-up display (either external, like a Google Glass-style overlay, or internal/invisible, like a contact lens display) that shows transcribed speech.

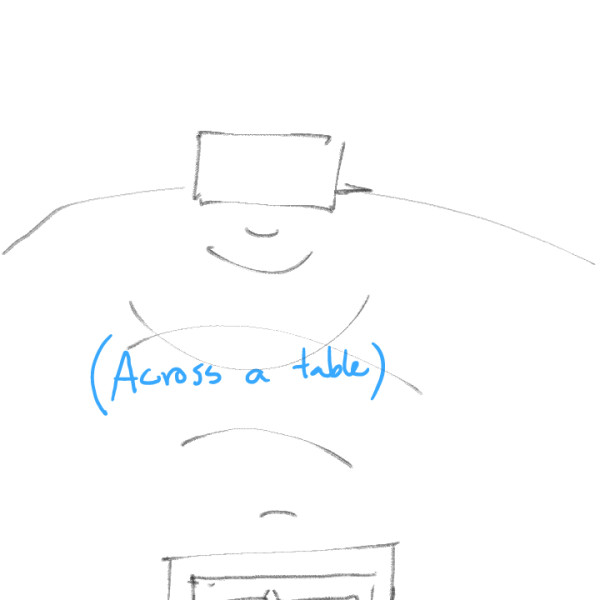

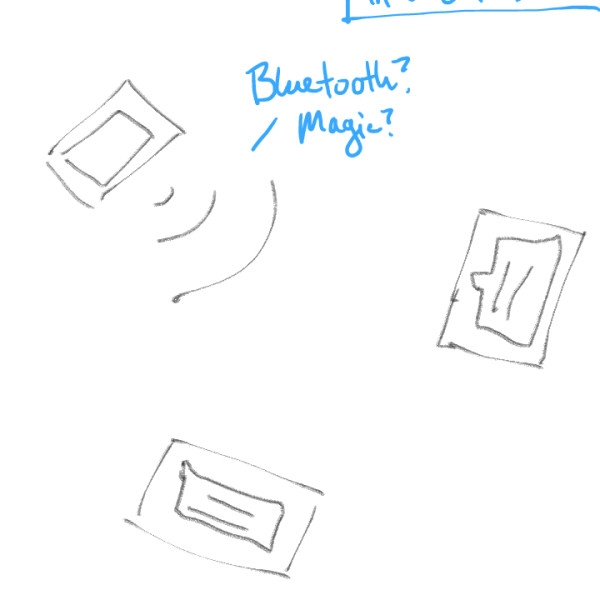

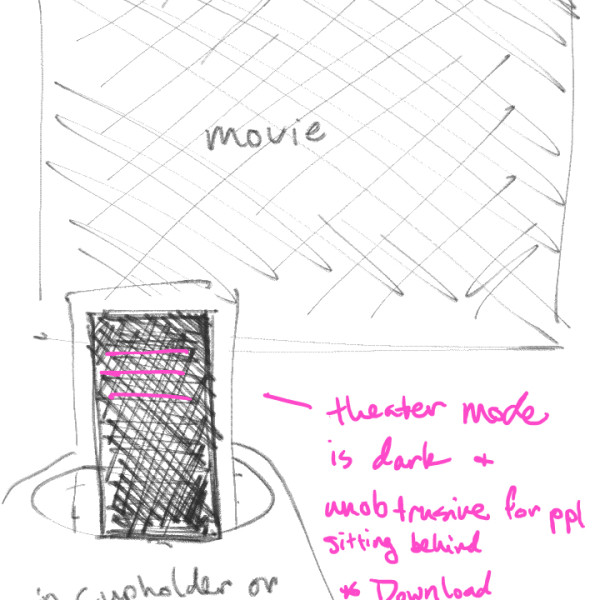

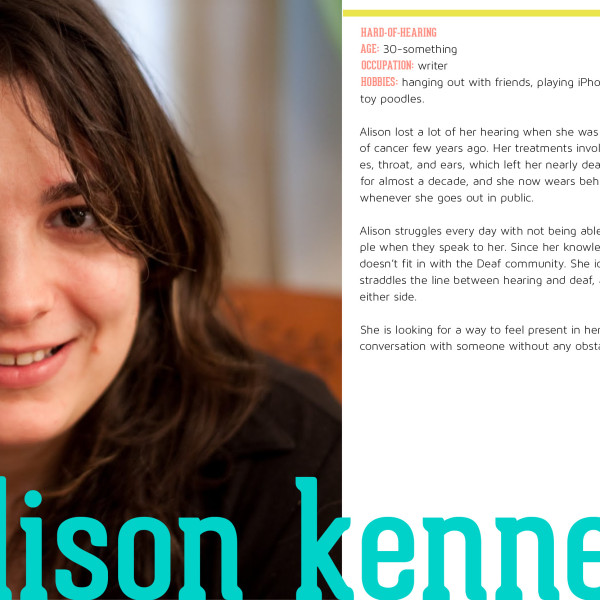

I am starting to gravitate toward the idea of a mobile app supplemented by peripherals, instead of an app on its own that relies on default phone hardware. Making layers of technology invisible and/or well-designed appeals to me as a geeky designer, but it excites me even more because I want to create fewer barriers (not more!) between hearing and deaf people. Technology that integrates smoothly into daily life, or works behind the scenes, is a very important aspect to consider: it would make everyone in a deaf/hearing conversation feel a lot less awkward. I think that should be my focus.

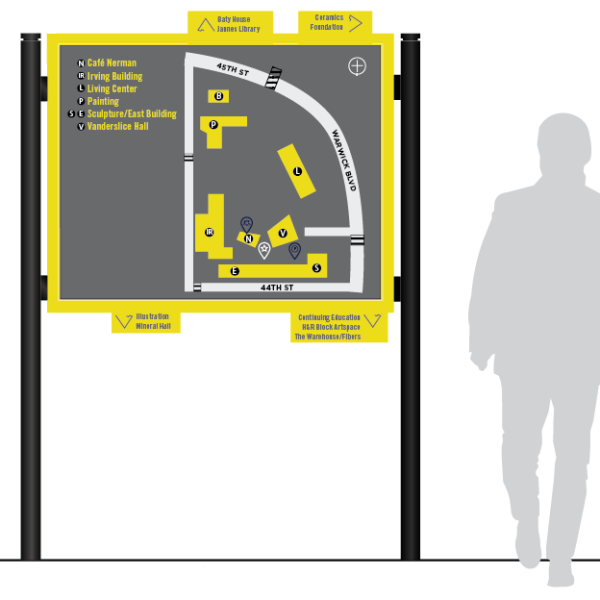

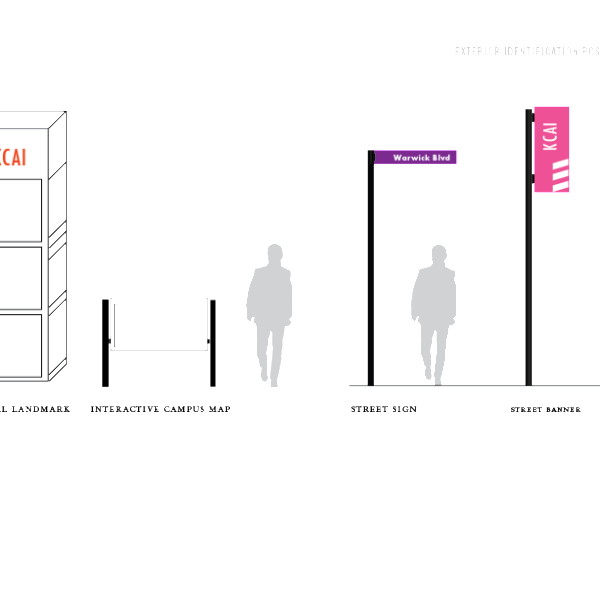

Filed under: Degree Project, Find&Share, KCAI, Learning