One last Deaf Device Find & Share

By Erika Goering

As my unusually large number of find & share posts this semester shows, the world is clearly looking for technological solutions to Deaf/hearing language barriers.

Here’s one last one that I found the other day:

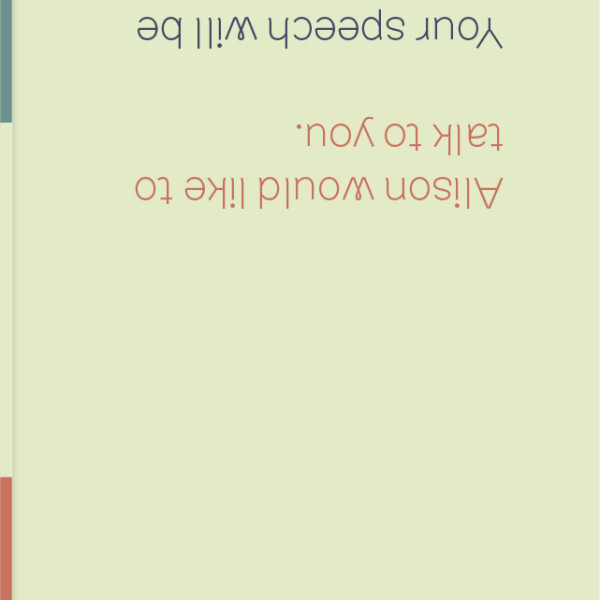

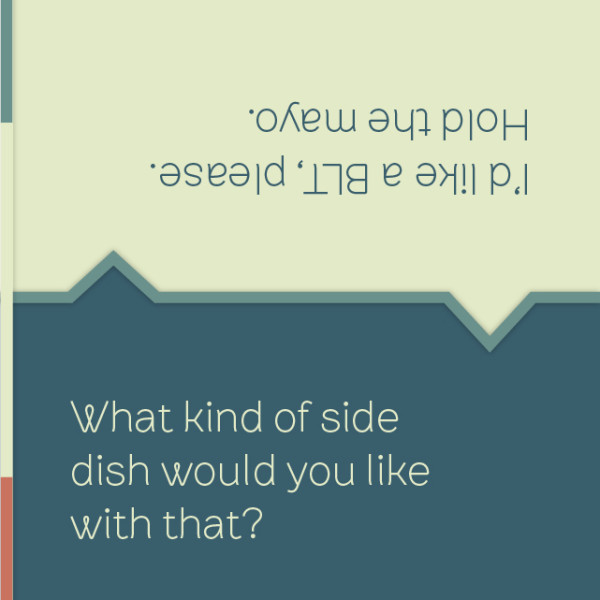

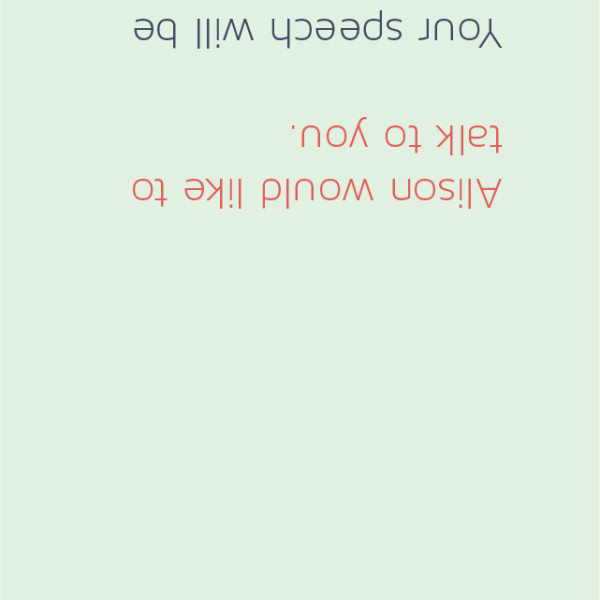

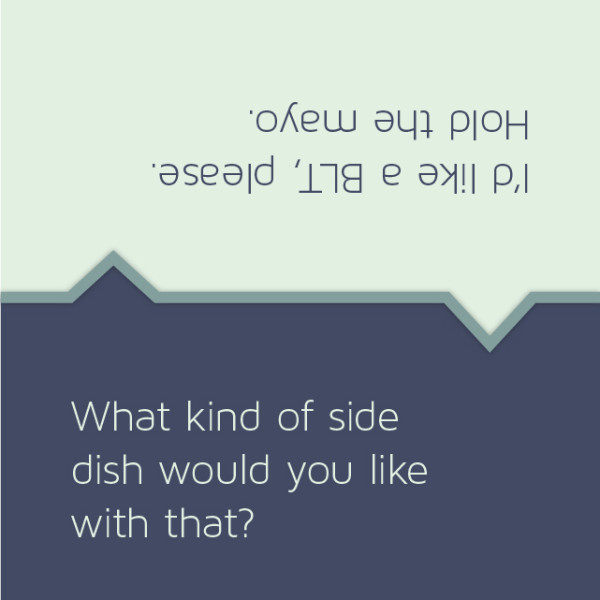

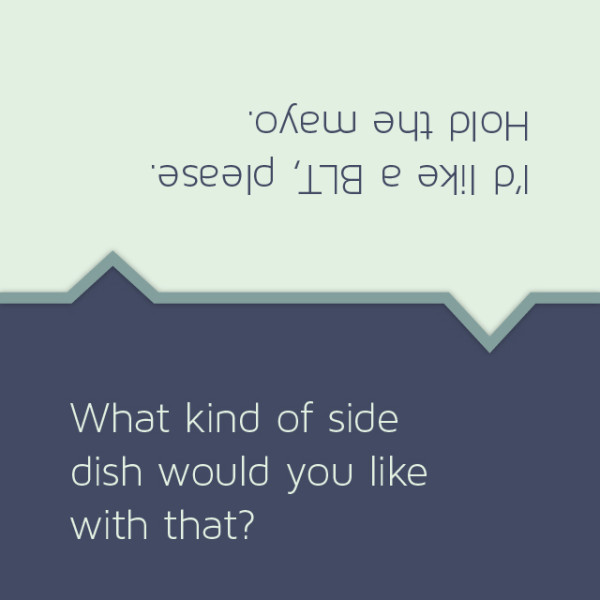

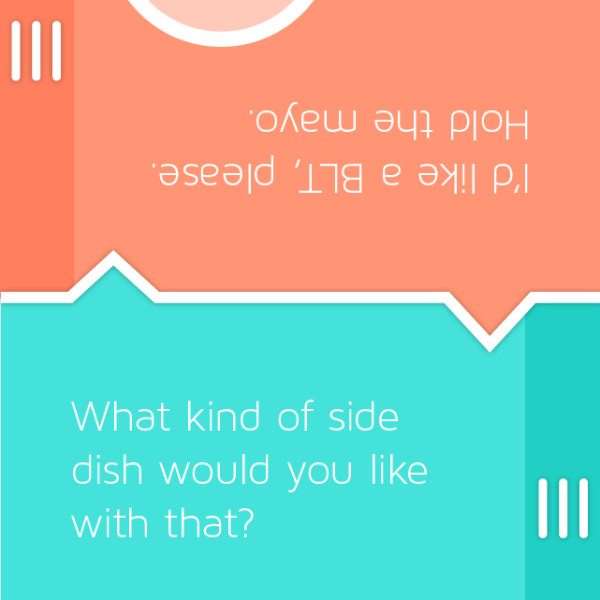

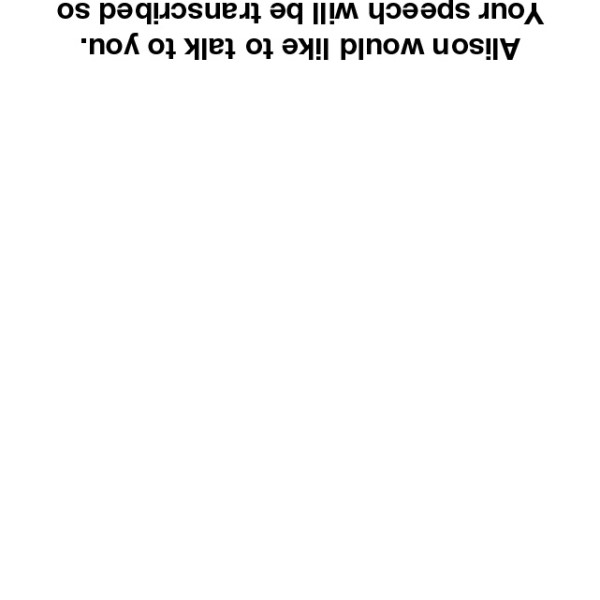

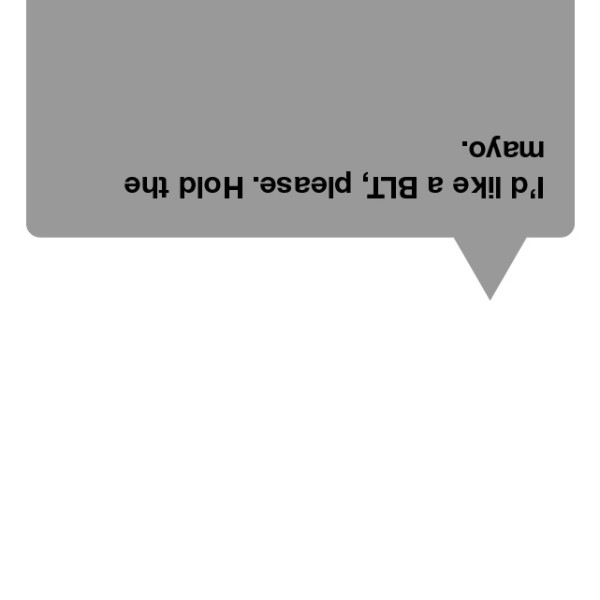

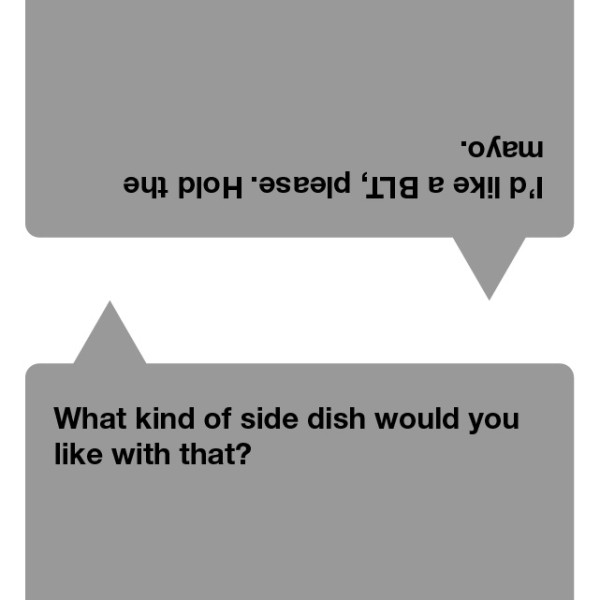

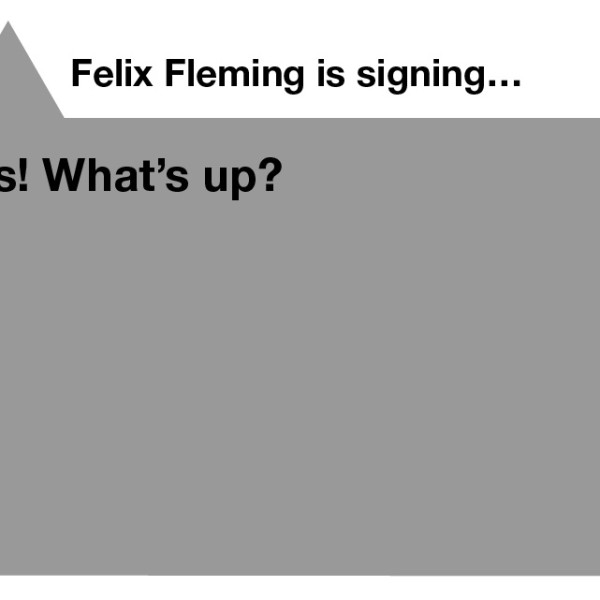

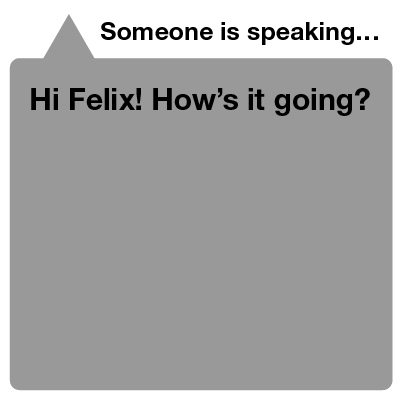

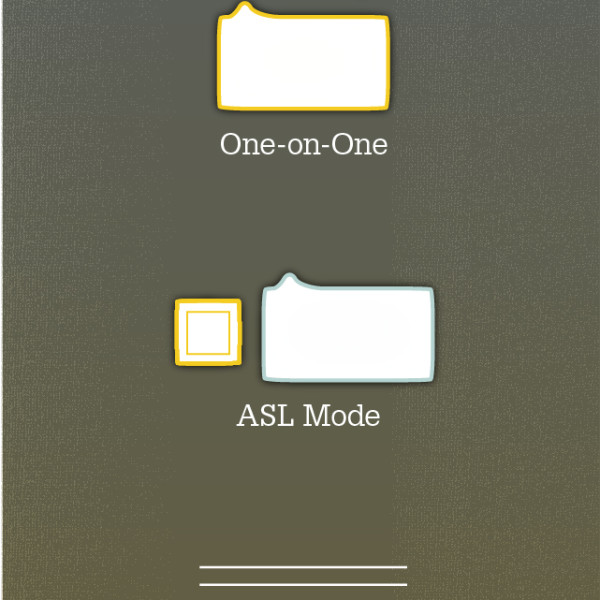

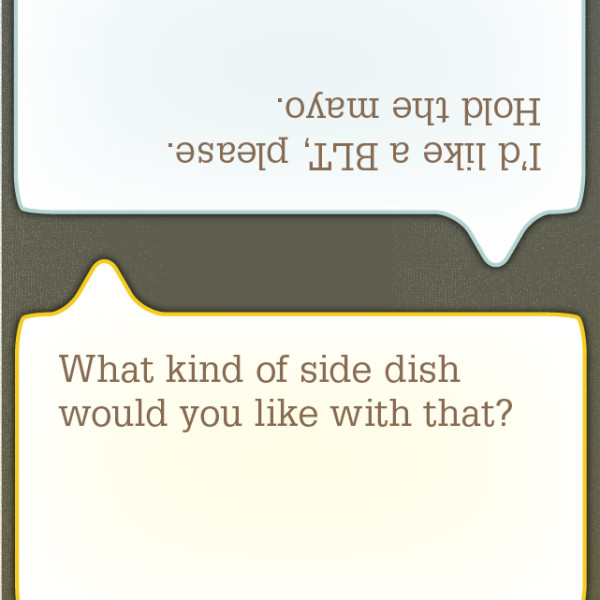

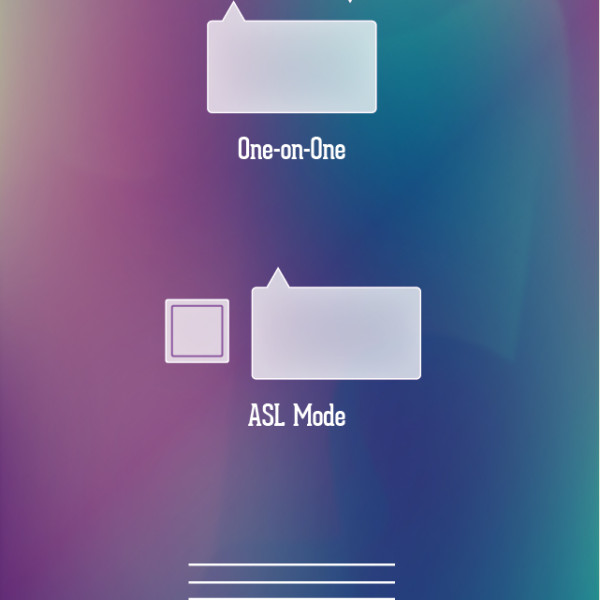

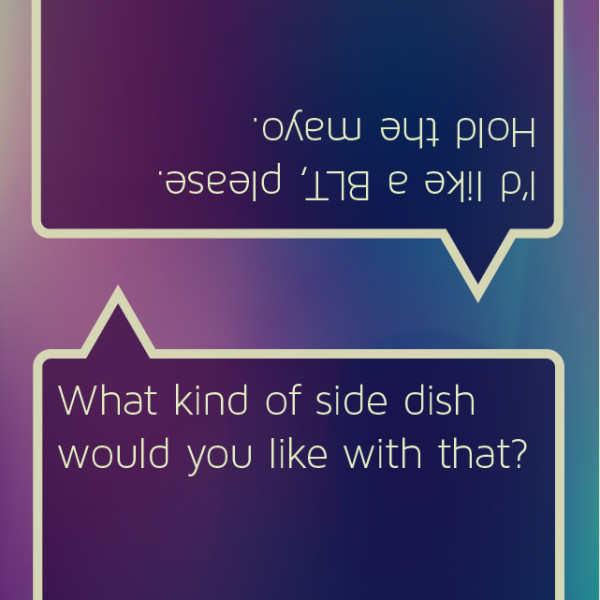

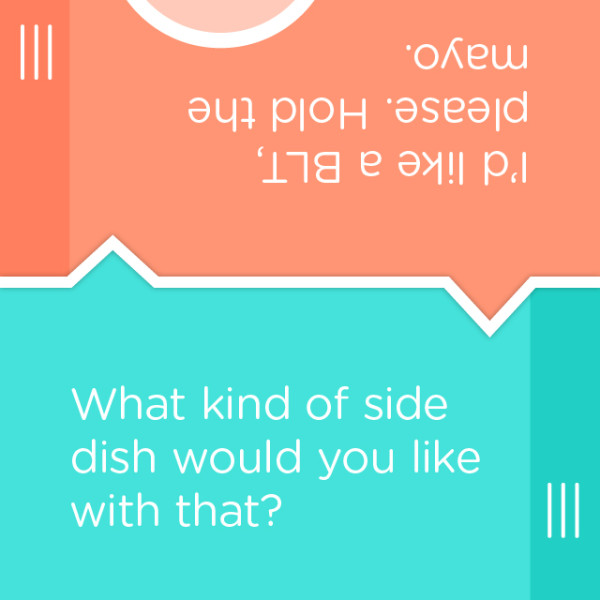

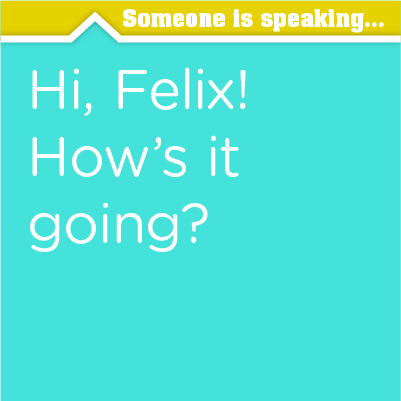

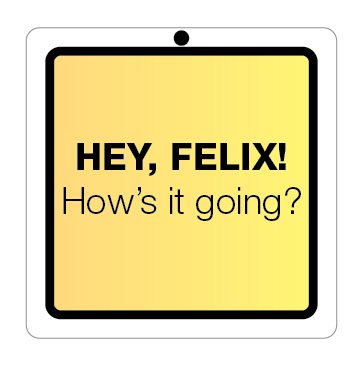

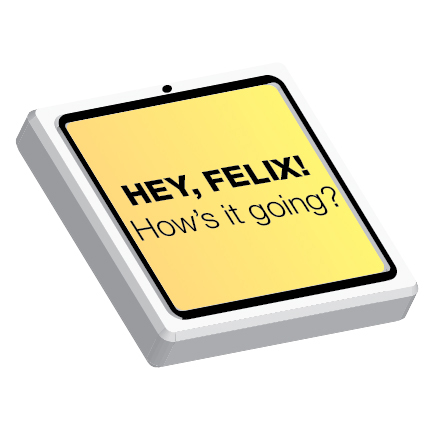

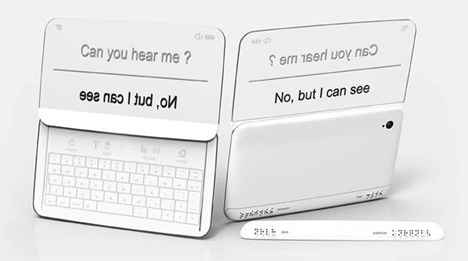

It uses the same idea I had for directionality: each person in a conversation can easily read the text that is directed toward them.

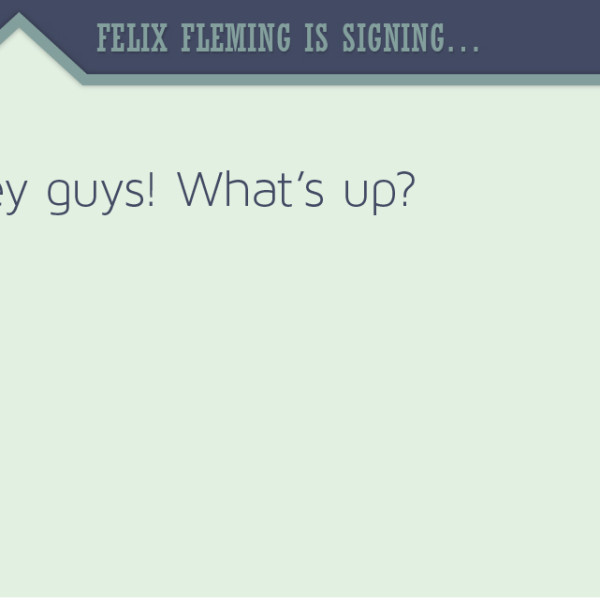

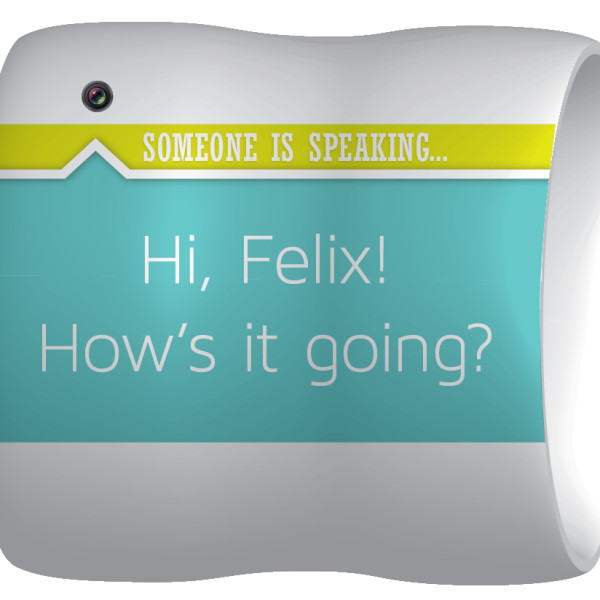

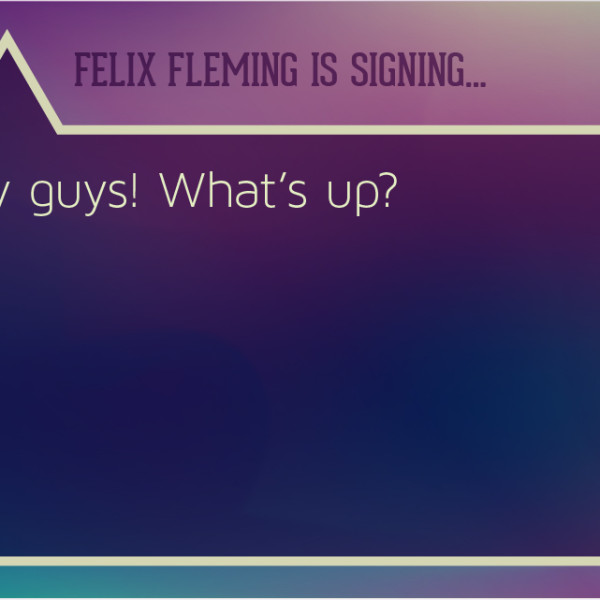

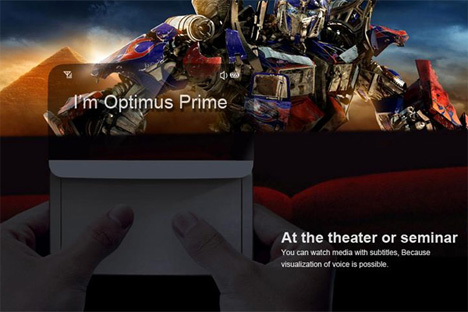

It’s even got a movie mode!

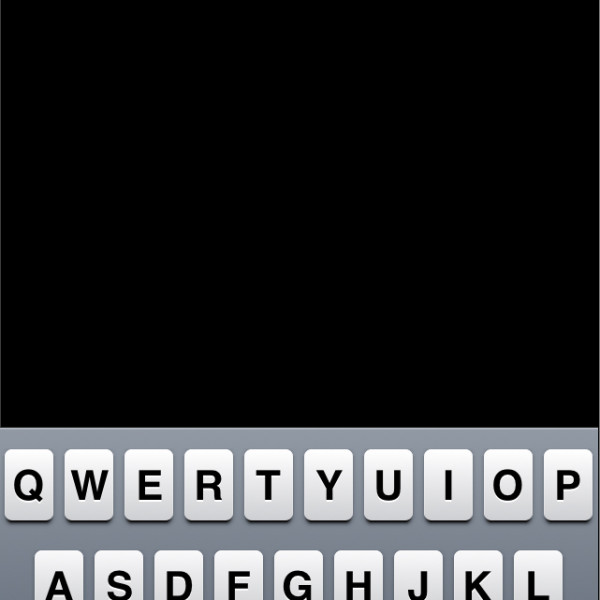

The unique thing about the Beethoven phone is that it also has functionality for blind users! There’s braille output for situations where hearing isn’t an option but reading is okay (or, of course, if the user is both blind and deaf).

Braille for the blind!

Filed under: Degree Project, Find&Share, KCAI, Learning
